High-quality, context-aware data annotation is essential for effective AI model training, and partnering with expert, scalable BPO providers can enhance accuracy, reduce bias, and improve operational efficiency across industries.

Read Time: 12 minutes

Introduction

AI is only as good as its data, and who labels that data matters just as much as the data itself. The person or program assigning meaning to data shapes the model's success by teaching it to interpret human language, images, audio, and video in a particular way. Poor annotation leads to poor outcomes, while accurate annotation with contextual nuance leads to smarter, more scalable AI solutions.

This article explains what expert data annotation involves, how poor annotation undermines AI models, and how to choose an outsourcing partner that gets it right.

Key takeaways

- High-quality data annotation is critical to AI performance. Accurate, context-rich annotation reduces bias and misclassifications, making AI models more reliable.

- Successful annotation efforts require diverse teams, clear guidelines, regular training, and ongoing quality control to ensure objective, culturally aware labeling.

- Data annotation outsourcing provides scalability, specialized tools, multilingual support, and improved efficiency. The right partner ensures regulatory compliance, integrates with existing systems, and implements rigorous quality control pipelines, ultimately accelerating AI development while minimizing operational costs.

What is data annotation and why does it matter?

Data annotation is the process of labeling or tagging data so machines can interpret it. Machine Learning (ML) models learn patterns from these labeled examples, which is how AI programs learn to understand and replicate human language, among other tasks. Human annotators or automated tools group similar words and phrases and attach labels to them; the AI then uses those labels to interpret new inputs and generate responses to prompts.

Artificial intelligence is the talk of the digital world, dominating industries and reshaping tasks, workforces, and the way businesses and customers interact. Data annotation matters because it determines how well AI tools interpret data, streamline processes, and automate communication with audiences. As companies understand consumers more accurately, they can make strategic, data-driven decisions that improve that communication and empower customers to help themselves.

Core types of data annotation

Data come in all forms, so dividing data annotation into types helps annotators and programs efficiently sift through and accurately label vast amounts of information according to type and purpose. Most annotation work falls into four core types:

- Text annotation tags text, including words and phrases, to denote sentiments, relationships, parts of speech, and intents.

- Image annotation labels visual images and components in images to identify objects, people, and actions.

- Video annotation identifies and tracks objects, recognizes actions and poses, segments videos, detects events, and labels other relevant information in videos.

- Audio annotation labels sounds, speech, audio events, and emotions and produces transcriptions used to train voice assistants, accent neutralization models, and other audio-based models.
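To make these types concrete, here is a minimal Python sketch of what individual annotation records might look like for text and images. The class and field names (`TextAnnotation`, `TextSpanLabel`, `BoundingBox`) are hypothetical and do not match any particular annotation tool's export format; real tools define their own schemas.

```python
from dataclasses import dataclass, field


@dataclass
class TextSpanLabel:
    """A labeled span inside a text, such as a product name or intent cue."""
    start: int  # character offset where the span begins (inclusive)
    end: int    # character offset where the span ends (exclusive)
    label: str  # tag assigned by the annotator, e.g. "PRODUCT"


@dataclass
class TextAnnotation:
    """Text annotation: document-level sentiment and intent plus labeled spans."""
    text: str
    sentiment: str  # e.g. "negative"
    intent: str     # e.g. "refund_request"
    spans: list[TextSpanLabel] = field(default_factory=list)

    def span_text(self, span: TextSpanLabel) -> str:
        # Slice the original text so reviewers can check what was tagged.
        return self.text[span.start:span.end]


@dataclass
class BoundingBox:
    """Image annotation: a labeled rectangle in pixel coordinates."""
    label: str
    x: int  # left edge
    y: int  # top edge
    width: int
    height: int

    def area(self) -> int:
        return self.width * self.height


example = TextAnnotation(
    text="My blender arrived broken and I want a refund.",
    sentiment="negative",
    intent="refund_request",
    spans=[TextSpanLabel(start=3, end=10, label="PRODUCT")],
)
box = BoundingBox(label="car", x=10, y=20, width=50, height=30)

print(example.span_text(example.spans[0]))  # blender
print(box.area())                           # 1500
```

Storing character offsets rather than copied substrings keeps each label tied to its exact position in the source text, which makes later review and relabeling easier.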

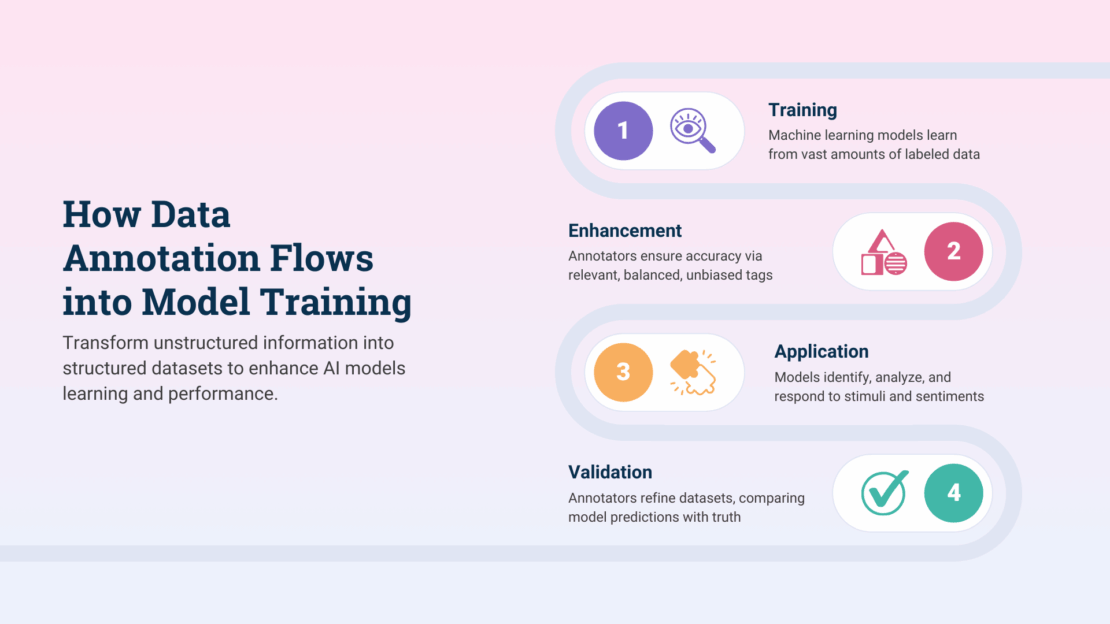

Role in AI model training

Data annotation is the building block of AI learning methods. As data is assigned meaning, AI models learn from it, and the labeled examples become the training datasets that shape how the algorithm behaves. These common AI techniques rely on annotated data to interpret language, images, and other inputs:

Supervised learning trains algorithms on labeled datasets, pairing each input with its correct output, so they can make predictions or decisions about new, unseen data.

Primary goal: To identify patterns within the data, allowing the machine to map inputs to correct outputs.
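A toy illustration of the supervised idea: the sketch below "trains" a nearest-centroid classifier by averaging the labeled examples for each class, then predicts the label whose average is closest to a new input. The two features (imagined here as average brightness and share of sky-blue pixels for an indoor/outdoor image classifier) and all values are made up for illustration; production models are far more complex.

```python
from statistics import mean


def train_centroids(examples):
    """Learn one average feature vector (centroid) per label from labeled data."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    # zip(*rows) groups the values of each feature dimension together
    return {
        label: tuple(mean(dim) for dim in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


# Hypothetical labeled examples: (brightness, share of sky-blue pixels) -> label
labeled = [
    ((0.2, 0.10), "indoor"),
    ((0.3, 0.05), "indoor"),
    ((0.8, 0.60), "outdoor"),
    ((0.9, 0.70), "outdoor"),
]
centroids = train_centroids(labeled)
print(predict(centroids, (0.7, 0.5)))   # outdoor
print(predict(centroids, (0.25, 0.1)))  # indoor
```

Because each centroid is just the average of its labeled examples, a single mislabeled example pulls its class's centroid toward the wrong cluster. Real models are more sophisticated, but they inherit label errors in a similar way.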

Deep learning relies on neural networks with multiple layers (deep neural networks) to model intricate patterns and representations within data, forming the foundation of many AI apps.

Primary goal: To teach computers to learn and make decisions independently.

Natural Language Processing (NLP) enables computers to understand, interpret, and generate human language through computational linguistics, machine learning, and deep learning.

Primary goal: To respond to natural language, bridging the gap between human communication and machine understanding.

Computer Vision (CV) helps machines interpret and understand visual information from the world, analyzing images and videos to recognize objects, people, and scenes.

Primary goal: To perceive, recognize, and react to visual stimuli.

Impact on AI performance and learning curves

Data annotation’s impact goes beyond simple classification. Because high-quality annotation is accurate, detailed, and contextually rich, it improves AI model performance and shortens learning curves. As a result, models reach proficiency faster and become more efficient, dynamic tools for users.

But how does this play out in real life? Here are a few examples of data annotation in practice:

- Data annotation services for computer vision include classifying images as indoor or outdoor, marking specific points in faces or landmarks, and enabling self-driving cars to recognize and respond to surroundings.

- Multilingual data annotation tags and categorizes content across languages, helping models learn to understand and generate communication in multiple languages.

- Data annotation for medical imaging marks specific areas in X-rays, MRIs, CT scans, ultrasounds, or PET scans to make them machine-readable for AI systems, empowering them to detect abnormalities and assist professionals in diagnosis and treatment planning.

How poor data annotation leads to AI model failure

Insufficient attention to data annotation introduces errors that drastically reduce the effectiveness of your AI model. Tracking these common stumbling blocks is the first step toward quality control in outsourced data annotation.

Model bias from inconsistent or incorrect labels

Accurately tagging data is vital to the reliability of your AI model. Inconsistent labeling introduces model bias: systematic distortions in what the model learns from key data. Common causes of model bias include the following:

- Human annotator subjectivity

- Cultural views

- Unreliable tools

- Non-compliant methods

These inconsistencies make it harder for the model to categorize data correctly, with significant consequences:

- Poor performance on diverse data

- Amplified biases upholding social inequities or stereotypes

- Loss of trust in AI systems

- Poor performance on new, unbiased data

While complex or culturally significant data may be difficult to define objectively, setting clear standards is vital to the success of your AI tool and your interactions with customers.

Reduced accuracy and misclassifications

When human annotators label data inconsistently, inaccuracies arise and the AI model's performance suffers. Mislabeled or missing annotations muddy the waters, making it harder for teams and algorithms to interpret the data and establish guidelines.

Inconsistencies can have drastic results. Misclassifications of medical imaging, for example, can lead to misdiagnoses, affecting patient care and safety. Human assumptions can override objective facts, and those errors accumulate into poor training data.

It can be difficult to trust a third party to label this data correctly, but experienced industry specialists are comfortable following the relevant guidelines and regulations and applying them accurately.

Hidden costs of managing inaccuracies

Inaccurate annotation affects your operational efficiency, forcing you to spend more time, energy, and resources reacting to and resolving problems.

- Re-training: What’s not accurately tagged the first time must be re-tagged later, and you also have to invest in training your team and new hires on guidelines and best practices.

- Error rates: Error rates, a key performance indicator for data annotation teams, rise with inconsistent tagging and can be difficult to bring back down.

- Customer churn: Poor data annotation and high error rates reduce customer satisfaction and trust, forcing you to go to greater lengths to win back customers.

- Compliance and ethical issues: Your business may face fines as a result of compliance or ethical issues.

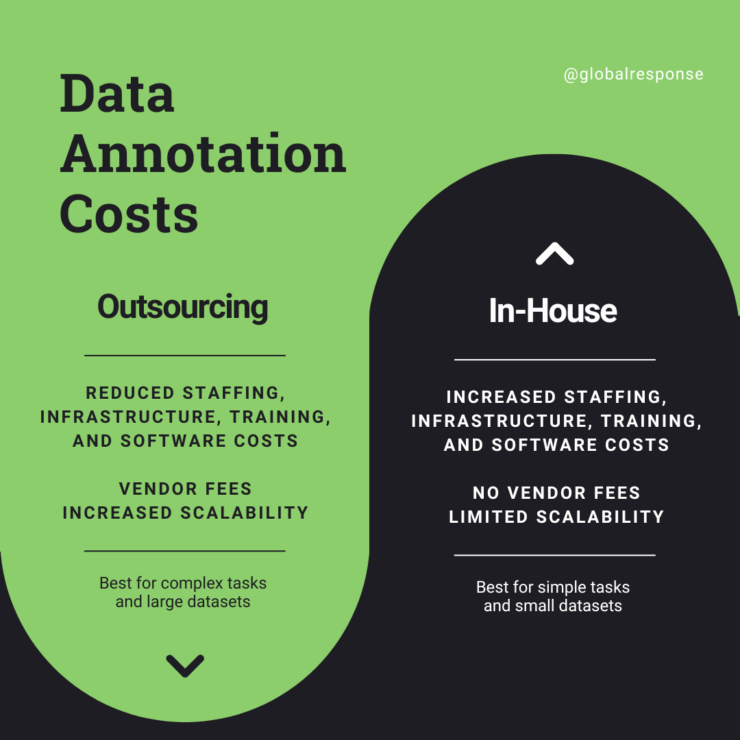

The real-world consequences of bias and inaccurate tagging affect the operational efficiency and financial health of an organization. Outsourcing reduces these risks by streamlining operations through advanced technology integrations and human-in-the-loop data annotation. Review this cost comparison of in-house vs. outsourced data annotation to see how outsourcing can help you achieve more without increasing costs.

Even with expert support, you should still know data annotation best practices so your strategies align with both your goals and your BPO provider’s approach. Follow these tips to maintain quality control in outsourced data annotation.

- Access a diverse pool of annotators. Diversity helps your business minimize biases and catch inaccuracies you might have otherwise overlooked.

- Balance datasets. When training data is imbalanced, judging a model by overall accuracy alone can hide poor performance on minority classes, because the majority class dominates what the model learns. Dataset balancing collects more of the right data for underrepresented classes and tests the model’s performance across several metrics, improving the contextual accuracy of your data annotation.

- Clarify guidelines and quality standards. Constantly updating and refining your guidelines will help annotators overcome ambiguity in data tagging.

- Regularly train for bias awareness. Teach your annotators about sampling bias, annotator bias, cognitive bias, cultural bias, and label imbalance bias so they can recognize their own blind spots and make more objective annotations.

- Implement regular quality checks. Use tracking tools to monitor annotator performance and accuracy with measures such as inter-annotator agreement (for example, Cohen’s kappa, Fleiss’ kappa, or Krippendorff’s alpha) and the F1 score.
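As a sketch of two of these checks, the Python below computes Cohen's kappa (agreement between two annotators, corrected for chance) and a per-label F1 score against a gold-standard set, using the standard formulas. The sentiment labels are hypothetical. For more than two annotators you would use Fleiss' kappa or Krippendorff's alpha instead, and libraries such as scikit-learn ship tested implementations (`cohen_kappa_score` and `f1_score` in `sklearn.metrics`).

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("expected two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items both annotators labeled identically
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (p_observed - p_expected) / (1 - p_expected)


def f1_for_label(predicted, gold, label):
    """F1 score for one label, comparing annotator output with a gold-standard set."""
    pairs = list(zip(predicted, gold))
    tp = sum(p == label and g == label for p, g in pairs)
    fp = sum(p == label and g != label for p, g in pairs)
    fn = sum(p != label and g == label for p, g in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical sentiment labels for eight items
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]
gold = annotator_a  # pretend annotator A's labels were verified as the gold standard

print(cohens_kappa(annotator_a, annotator_b))  # 0.5
print(f1_for_label(annotator_b, gold, "pos"))  # 0.75
```

Here the annotators agree on 6 of 8 items (75%), but their label frequencies alone would produce 50% agreement by chance, so kappa is (0.75 - 0.5) / (1 - 0.5) = 0.5. That gap between raw agreement and kappa is why chance-corrected measures are preferred for quality checks.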

What makes a good data annotation partner?

A good data annotation partner leads your data annotation efforts with expertise, precision, and vision. They should not only know your industry but also have the flexibility to customize their data annotation services to your needs, challenges, and goals. A good data annotation partner swiftly implements scalable data annotation solutions to accommodate demand surges without compromising quality or performance. Your partner should connect you with cutting-edge technology and data annotation experts skilled at handling complex, nuanced work.

This data annotation partner checklist will help you find and build a partnership that will enhance your AI models and expand your AI support solutions.

- Domain expertise

- Industry-specific knowledge

- Scalable teams and infrastructure

- Quality assurance and review pipelines

- Hybrid/human-in-the-loop (HITL) annotation systems

Global Response is among the best data annotation companies for outsourcing. Our multilingual experts, working from 8 global locations, serve a variety of industries with leading technology solutions.

Key criteria to evaluate BPO providers for annotation

It’s easy to get lost in the fuss of a meeting as you scope out potential annotation BPO providers. Keep track of these key data annotation partner criteria to gauge how successful a partnership with each BPO on your roster is likely to be.

- Data security and compliance standards (HIPAA, GDPR, etc.): Ask how each provider handles sensitive data to gauge its commitment to regulatory adherence.

- Multi-language and cultural context capabilities: Ask how they implement multilingual data annotation for NLP and how they mitigate bias in different situations.

- Tool compatibility and API integrations: Demo the tools they offer. Ask how those tools integrate with your current tech stack and how they measure annotator accuracy.

- Workforce training and transparency: Review proposed training plans, projected time to proficiency, tracked performance metrics, and continuous training efforts.

Download our Key Traits of Top Data Annotation BPOs Checklist now to help evaluate which BPO partner to choose.

Outsourced data annotation case study

Ancera, a software analytics company for the poultry industry, struggled to process data with its existing imaging operations. The ML team lacked the skills to filter data and optimize the model’s performance. Manual data and model workflows stretched development cycles to 3 to 4 months instead of the desired few weeks.

Ancera turned to Voxel51, the company behind the FiftyOne data curation platform, and implemented FiftyOne Enterprise to streamline data curation and more accurately classify and isolate objects in datasets. The integration helped the team maintain high-quality training and test datasets across diverse imaging conditions by analyzing false positives and negatives, confusion matrices, and model scores.

The collaboration provided instant visibility into model predictions and label quality, allowing the team to review and relabel 20,000 detections across hundreds of images in just a few days. The solution shrank the feedback loop from months to days and improved model performance by 7%.

Conclusion

The annotation layer is the foundation of AI success. High-quality data annotation reduces bias and balances datasets to teach AI algorithms how to understand and replicate human language. Choosing the right BPO partner gives businesses scalable, cost-efficient, expert multilingual support and access to cutting-edge technology that streamlines operations and improves service quality.

Explore how our BPO annotation team can supercharge your AI pipeline. Contact Global Response for a free quote today.

FAQs

Why is data annotation important for AI?

Data annotation plays a key role in AI model success. It ensures models can learn from images, videos, text, and audio, and it underpins the accuracy and fairness of machine learning and content generation capabilities.

Should you outsource data annotation or keep it in-house?

The choice depends on project size, security needs, and budget constraints. In-house data annotation provides direct oversight and security and suits simple tasks, but it is costly and difficult to scale. Outsourcing is ideal for complex tasks, large datasets, and urgent projects, making it the better choice for businesses looking to scale operations while reducing costs.

Which industries benefit most from outsourcing data annotation?

Industries that benefit most from outsourcing data annotation include healthcare, retail, automotive, security, finance, manufacturing, and climate science.

How do BPOs ensure annotation quality at scale?

BPOs ensure annotation quality at scale by implementing comprehensive quality control measures, balancing datasets, reducing bias, using expert tools and infrastructure to manage training data, and running repeatable, thorough labeling processes.

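Can data annotation BPOs handle regulated or sensitive data?

Yes. Data annotation BPO providers that follow standards such as HIPAA and GDPR can securely handle regulated or sensitive data, including names, emails, payment information, health records, and financial data.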

How much does data annotation cost?

Data annotation costs vary according to project size, the type of data annotated, and the services and discounts offered. Generally, outsourced data annotation is priced from $0.015 to $0.08 per annotation, with variable pricing depending on project size. Contact a data annotation BPO to get a personalized quote.
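For a rough budget, the $0.015 to $0.08 per-annotation range can be multiplied by your expected volume. The 100,000-annotation project below is a hypothetical figure, and this sketch ignores the volume discounts and data-type differences that real quotes account for.

```python
def annotation_cost_range(num_annotations, low_rate=0.015, high_rate=0.08):
    """Return (low, high) total cost in dollars for a per-annotation price range."""
    if num_annotations < 0:
        raise ValueError("num_annotations must be non-negative")
    return num_annotations * low_rate, num_annotations * high_rate


low, high = annotation_cost_range(100_000)  # hypothetical project size
print(f"${low:,.2f} to ${high:,.2f}")  # $1,500.00 to $8,000.00
```

The wide spread between the two ends reflects how much data type and task complexity drive pricing, which is why a personalized quote matters.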