Introduction

Imagine for a moment that your brand is at the center of a social media firestorm. You’re launching a major product, so all interested eyes are on your company. During the launch, a user leaves an offensive message in your Instagram live chat. Even though the message is visible for only a few seconds before you delete it, that’s long enough for people to take screenshots. Whether or not it’s your fault, your branding does not look good next to offensive material, and the result can be backlash, lost business, and PR headaches.

Scenarios like these are real, but they are also easily preventable through proper chat moderation. Moderation is about more than filtering out profanity or stopping spam. It allows you to defend your brand’s reputation and preserve your customers’ trust.

Key takeaways

- Consistency: Chat moderation is essential to a consistent, trusted omnichannel customer experience.

- Good moderation requires AI and humans together: AI alone can’t handle the nuance of live customer interactions. Human moderation fills the gaps.

- Benefits of great chat moderation: Strategic chat moderation ensures compliance, brand protection, and customer safety.

- What to look for in an outsourced chat moderation partner: Choosing the right outsourced chat moderation partner brings scale, global expertise, and 24/7 coverage.

What are chat moderation services in omnichannel CX?

Chat moderation services help make sure that customer conversations meet the expectations of your community, the legal standards where you do business, and your individual brand guidelines, regardless of the digital platform where the communication takes place. In the context of business process outsourcing (BPO), chat moderation services can entail either real-time or delayed review of messages to identify and remove any content that is potentially harmful, abusive, or counter to your brand’s message.

Types of moderated channels

Various channels can be moderated to help make the customer experience on all of your online platforms positive and ensure that everything stays on-topic, safe, and helpful. Some of the channels you can moderate include:

- Webchat: Ensure that all communication through your on-site live chat tools stays on-brand and free of malicious messages, allowing for a more effective and welcoming customer experience.

- Messaging apps: Monitor and moderate the DMs on your official messaging app accounts, from WhatsApp to Facebook Messenger, and other DM-based customer engagement tools.

- Social platforms: Foster a strong, helpful, and compassionate community on your social platforms by moderating Instagram comments, TikTok replies, Twitch chat, and more.

- In-app/live event chats: Stay on top of customer chats within your own mobile apps or during webinars and other live events with real-time discussion.

Why brand trust depends on real-time moderation

A lot can happen in a short amount of time when it comes to user-generated content, and if you aren’t paying attention when it does, whatever happens could be at the expense of your customers or your brand.

Customer perception & brand safety

When moderation is inconsistent or missing entirely, it creates an environment that is prone not only to off-brand messaging but, even worse, to abuse and harassment. As a brand offering a platform for public discussion and user-generated content, it’s your responsibility to cultivate an environment that stays on-brand and keeps users safe. Customers expect that safety, along with clear and respectful communication. When you violate those expectations or allow unsafe material, you damage your brand image and customer loyalty, and ultimately harm people.

By monitoring chats in real time, you can make sure that any inappropriate content is identified and removed before it escalates or spreads.

Impact across channels

Negative interactions aren’t limited to the platform on which they occur. Customers can and will share their experiences from one platform on others. Even if they don’t, they still walk away having had a bad experience with your brand, which will color any future interaction on any channel, assuming they stay loyal to your brand at all.

Beyond bots: The case for human-led chat moderation

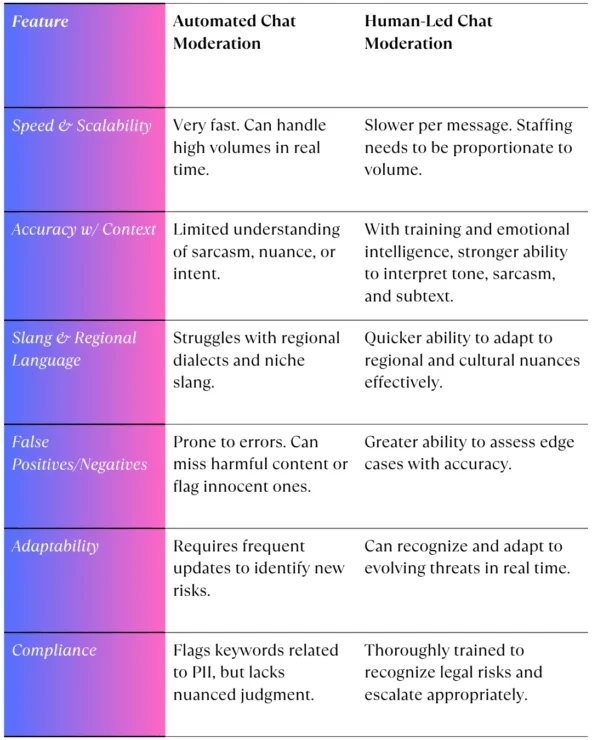

While automated chat moderation is incredibly useful and effective, there will always be a need for a human touch. Here’s why.

Where automation falls short

Though AI chat moderation has evolved in major ways, providing new levels of speed and scalability, there are still critical limitations to the technology.

- Computers like clarity, and communication isn’t always clear: We, as humans, often don’t say precisely what we mean, whether on purpose or unintentionally. Though automated tools have gotten better at deciphering us, coded language like sarcasm, innuendo, vague cultural references, or even dog-whistle hate speech can still go over an AI moderator’s head, which makes human-led chat moderation all the more important.

- False positives or negatives: AI moderation is prone to false positives and false negatives, which means that harmless content might get flagged, while a genuinely problematic message slips through.

- Regional slang and linguistic nuances: Because AI moderators are only as good as the datasets they are trained on, they might make the wrong decision based on a misunderstanding of regional slang or incomplete training on the nuances of certain languages. These missteps damage the customer experience and, if left unaddressed, put your brand image at risk.

Benefits of human moderation

Human moderation, on the other hand, remains highly effective at combating the issues above. A well-trained human moderator brings both emotional intelligence and contextual awareness to every customer interaction. This allows them to read between the lines, interpret the intent of different users, and make informed decisions to moderate conversations appropriately.

Humans are still adept at distinguishing tone, which allows them to interpret language more accurately, de-escalate tense conversations with empathy, identify (and put a stop to) harmful patterns, and offer a level of experience and intuition that AI alone can’t provide.
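One common way to blend the two approaches is to let automation handle the clear-cut cases and send anything ambiguous to a person. The sketch below is a minimal illustration of that idea, not a description of any particular vendor’s system. It assumes an upstream model has already produced a risk score between 0 and 1 for each message. The threshold values and the names `route_message` and `Decision` are hypothetical and would need tuning for each channel and brand policy.

```python
from dataclasses import dataclass

# Hypothetical thresholds (assumptions for illustration only).
REMOVE_THRESHOLD = 0.90  # at or above this, the message is removed automatically
ALLOW_THRESHOLD = 0.20   # at or below this, the message is allowed automatically


@dataclass
class Decision:
    action: str   # "remove", "allow", or "human_review"
    score: float  # the automated risk score that led to the action


def route_message(risk_score: float) -> Decision:
    """Route a message based on an automated risk score between 0 and 1."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= REMOVE_THRESHOLD:
        return Decision("remove", risk_score)
    if risk_score <= ALLOW_THRESHOLD:
        return Decision("allow", risk_score)
    # Everything in between is ambiguous, so a human moderator decides.
    return Decision("human_review", risk_score)
```

Widening the gap between the two thresholds sends more messages to human moderators, which trades speed for nuance. Narrowing it does the opposite.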

How outsourced moderation strengthens global compliance & cultural sensitivity

Meeting standards like GDPR & COPPA

Chat moderation is about more than how your brand is perceived, or even your ethical duty to protect your customers. It is also essential for regulatory compliance. It’s all too possible for interactions in chats to land your company in tricky legal territory, leaving you vulnerable to fines and even lawsuits.

A good outsourced moderation team will be thoroughly trained to spot personally identifiable information (PII) and remove it to keep vulnerable parties safe, to flag and remove content that violates child protection laws like COPPA, and to make sure that conversations on your platform adhere strictly to the data privacy requirements set by regulations like GDPR.
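To make the PII step more concrete, here is a rough sketch of how an automated first pass might mask obvious email addresses and phone numbers before a human moderator reviews the conversation. The patterns and the function name `redact_pii` are illustrative assumptions. They are deliberately simple, will miss many real-world formats, and do not by themselves make a platform compliant with GDPR or COPPA.

```python
import re

# Deliberately simple, illustrative patterns (assumptions, not a complete PII detector).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(message: str) -> str:
    """Replace obvious email addresses and phone numbers with placeholders."""
    redacted = EMAIL_RE.sub("[email removed]", message)
    redacted = PHONE_RE.sub("[phone removed]", redacted)
    return redacted


if __name__ == "__main__":
    print(redact_pii("Reach me at jane.doe@example.com or +1 555-123-4567"))
```

Anything the patterns miss, such as a street address or a name paired with an account number, still needs a trained moderator to catch it.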

Multilingual and regional context matters

Even after compliance is ensured, cultural sensitivity and fluency should still be carefully considered in each interaction. When working in a global market, it’s important that your content moderation goes beyond simple translation. For any region where you do business, it’s imperative that you understand the local customs and communication styles, and that you know which issues are most sensitive locally.

A good outsourced moderation team knows that regional fluency and cultural awareness are critical for identifying content that is not just generally inappropriate, but might also carry unique weight in local markets, given the context of local culture.

Choosing the right chat moderation partner for omnichannel support

To evaluate potential outsourcing partners for omnichannel moderation support, it’s important to know exactly what to look for. Here is a quick cheat sheet to consult when weighing your options for outsourced chat moderation.

Your ideal partner will offer:

- 24/7 real-time chat monitoring across all relevant channels

- Blended AI and human moderation to give you the best of both speed and nuance

- Multilingual and high-volume support capabilities

- Experience with moderation for social and webchat channels

- Transparency in the reporting and escalation processes

- Strict compliance with international and relevant local and industry privacy and data protection laws

Final thoughts

Regardless of where your customers are chatting, the interactions that they have with your brand or their fellow customers go a long way toward shaping their perception of, and sentiment toward, the brand as a whole. For that reason, it’s crucial to think of chat moderation not as just a tech feature, but as a strategic investment in your brand perception and the trust of your customers.

Ready to enhance customer trust at every touchpoint? Talk to our chat moderation experts.